Description: This course introduces Artificial Intelligence (AI), a science focusing on developing intelligent systems capable of autonomous behavior. In this course, we explore the exciting world of AI, introducing its definition and history. We discuss the advantages and challenges of AI at present, along with various applications and projects that demonstrate its capabilities. Throughout the session, participants will gain insights into different types of AI, learn about running predefined projects, and discover AI showcases on various platforms. By the end of the course, participants will have the knowledge and resources to start their own AI projects with their data and explore the latest AI advancements in our clusters.

Teacher: Nastaran Shahparian (SHARCNET, York University)

Level: Introductory

Format: Lecture

Certificate: Attendance and Completion

Prerequisite: Basic Python knowledge and know-how is beneficial but not required.

Description: The Introduction to Alliance RDM Services webinar will feature experts that will discuss good research data management practices. The training will begin with an overview of research data management and then provide helpful guidance and tips for the different stages of the research data lifecycle focusing on the stages of data management planning, data preservation, active data management and sharing and discovery of data. We will highlight different tools and services that are available to researchers as they embark on their research journey.

Teacher: Marcus Closen (Alliance), Tristan Kuehn (Alliance), Daniel Manrique-Castano (Alliance), and Amanda Tomé (Alliance)

Level: Introductory

Format: Webinar

Certificate: Attendance

Prerequisites: None

Description (Parts 1 and 2): Introduction of neural network programming concepts, theory and techniques. The class material will being at an introductory level, intended for those with no experience with neural networks, eventually covering intermediate concepts.

Description (Part 3): This part will continue the development of the neural network programming approaches from Parts 1 & 2. This part will focus on methods used to generate sequences: LSTM networks, sequence-to-sequence networks, and transformers.

Teacher: Erik Spence (SciNet, University of Toronto)

Level: Introductory

Format: Lecture + Hands-on

Certificate: Attendance

Prerequisites:

- Experience with Python will be assumed. (This course is being taught assuming this.)

- No prior experience with the Keras neural framework is expected. (The Keras neural framework will be used for neural network programming.)

Description: Python is a popular language because it is easy to create programs quickly with simple syntax and a "batteries included" philosophy. However, there are some drawbacks to the language. It is notoriously difficult to parallelize because of a component called the global interpreter lock, and Python programs typically take many times longer to run than compiled languages such as Fortran, C, and C++, making Python less popular for creating performance-critical programs. Dask was developed to address the first problem of parallelism. The second problem of performance can be addressed by either using modules already compiled into fast C/C++ code, such as NumPy, or by converting performance-critical parts into a compiled language such as C/C++ nearly automatically using Cython. Together Cython and Dask can be used to gain greater performance and parallelism of Python programs.

Other than having some prior experience with a programming language, preferably Python, this is a beginner level course. During the course we will program together to build out a script used to demonstrate course concepts. This will take slightly longer than half the time, while hands on exercise will use the remaining time. No Alliance account is required.

Teacher: Chris Geroux (ACENET, Dalhousie University)

Level: Introductory

Format: Lecture + follow along coding + hands on exercises

Certificate: Attendance

Prerequisites: Should have experience programming in at least one language, ideally Python.

Description: Larger Deep Neural Networks (DNNs) are typically more powerful, but training models across multiple GPUs or multiple nodes isn’t trivial and requires a an understanding of both AI and high-performance computing (HPC). In this workshop we will give an overview of activation checkpointing, gradient accumulation, and various forms of data and model parallelism to overcome the challenges associated with large-model memory footprint, and walk through some examples.

Teacher: Jonathan Dursi (NVIDIA)

Level: Intermediate/Advanced

Format: Lecture + Demo

Certificate: Attendance

Prerequisites:

- Familiarity with training models in Pytorch on a single GPU will be assumed.

Description: We will cover how to write optimized code in C, and how to include this into your Python code. We will look at Cython, as well as pure C. If time permits, we will also look at including FORTRAN.

Teacher: Joey Bernard (ACENET, University of New Brunswick)

Level: Intermediate

Format: Lecture + Hands-on

Certificate: Attendance

Prerequisites:

- Basic Python programming experience.

- One knows how to use a C compiler.

Description: This session provides an introduction to data curation concepts and best practices. As an essential step in the data publication process, data curation techniques can be useful at all stages of a research project and across research disciplines. Attendees will learn the foundational principles of data curation, including the reasons for curation, and leave with a toolkit of resources that can be adapted to institutional and disciplinary needs.

Slides: https://doi.org/10.5281/zenodo.15634431

Teacher: Mikala Narlock (Indiana University Bloomington)

Level: Introductory

Format: Lecture

Certificate: Attendance

Prerequisites: None

Description: Running programs on the supercomputers is done via the Bash shell. This course is two three hour lectures on using BASH. No prior familiarity with bash is assumed. In addition to the basics of getting around, globbing, regular expressions, redirection, pipes, and scripting will be covered.

Teacher: Tyson Whitehead (SHARCNET, Western University)

Level: Introductory

Format: Lecture + Exercises with Questions

Certificate: Attendance and Completion

Prerequisites: None

Description: This course is designed to provide you with a solid foundation in Python programming language. Through a comprehensive curriculum and hands-on coding exercises, participants will learn the fundamentals of Python syntax, data types, functions, and file handling. By the end of the course, you will have gained the essential skills to write Python programs, solve problems, and build the foundation for more advanced Python development. Whether you are a beginner or have some programming experience, this course will equip you with the necessary tools to start your journey in Python programming.

Teacher: Fernando Hernandez Leiva (CAC: Queen's University)

Level: Introductory

Format: Workshop

Certificate: Attendance

Prerequisite: An account (free) on https://replit.com/. The course is delivered using a free online tool to let us focus on coding.

Description: The new H100 GPUs in Compute Ontario systems are up to 8 years newer and many times more powerful than some of the GPU that are being replaced. There are several new architectural features that are worth knowing about, and it's worth considering how to make full use of these much larger systems. In this session we'll cover: Differences from P100, V100, and A100; Advanced features; Taking advantage of the features with compilers and libraries that do much of the work for you; and the pros and cons of different approaches like MIG and MPS to stack multiple partly-accelerated runs on one GPU.

Teacher: Jonathan Dursi (NVIDIA)

Level: Intermediate

Format: Lecture

Certificate: Attendance

Prerequisites: Experience with previous generations of GPUs (V100, A100)

Description: Research Data Management (RDM) has emerged as a key component of the broader DRI (Digital Research Infrastructure) ecosystem. FAIR principles (making data Findable, Accessible, Interoperable, and Reusable) have been at the core of RDM initiatives for almost a decade now -- but how has our understanding and application of these principles evolved to address emerging technologies such as Machine Learning and AI? To answer this, we look at a recent policy document, “Enabling Global FAIR Data: WorldFAIR Policy Recommendations for Research Infrastructures”, published by CODATA and WorldFAIR in 2024. The first half of this session will provide a high-level distillation of, and reflection upon, this global policy brief, flagging areas where Canadian stakeholders can better support and promote FAIR data practices. The second half of this session will address the importance of the FAIR principles at a time when data are being suppressed or deleted, data agencies are being gutted or shuttered, and data-driven decision making is devalued and disparaged. Real-world examples will be provided to illustrate the range and impact of the data deletion chaos we are witnessing in real time, and possible responses to these actions.

Teachers: Ann Allan (Compute Ontario) and Jeff Moon (Compute Ontario)

Level: Introductory

Format: Lecture

Certificate: Attendance

Prerequisites: None

Description: With the release of the Tri-Agency Research Data Management Policy all Canadian post-secondary institutions and research hospitals that administer Tri-Agency funding were required to develop and post institutional research data management (RDM) strategies by March 1, 2023. As institutions finalized their strategies, they began to consider what implementation would look like. To support inter-institutional, cross-functional dialogue around implementation, a two-day, SSHRC-supported workshop was hosted at the University of Waterloo in September 2023. Over 30 institutions of varying sizes and research intensities sent cohorts representing libraries, information technology, and research offices to participate in dialogues around challenges and collaborative solutions in RDM strategy implementation. The high-level recommendations from that workshop have been released as the report Building an Inter-Institutional and Cross-Functional Research Data Management Community: From Strategy to Implementation. In this short workshop, participants will discuss the recommendations in the report and how they can be implemented in their institutions.

Teachers: Jennifer Abel (University of Calgary) and Ian Milligan (University of Waterloo)

Level: Intermediate

Format: Workshop

Certificate: Attendance

Prerequisites:

- Participants should be involved in RDM-supporting work in their institution; e.g., in the library, research office, IT/research computing, ethics, etc.

- Participants should also read the executive summary of the report before the workshop. The report is available at https://hdl.handle.net/10012/21683.

Description: Have you ever tried to run someone else’s code and it just didn’t work? Have you ever been lost interpreting your colleague’s data? This hands-on session will provide researchers with tools and techniques to make their research process more transparent and reusable in remote computing environments. We’ll be using platforms like JupyterHub and scripting languages like Bash to demonstrate the material. In this workshop, you’ll learn about:

- Organizing your file directories

- Writing readable metadata with README files

- Automating your workflow with scripts

- Capture and share your computational environment

Using large language models (GenAI) to assist with the above

Teachers: Sarah Huber (University of Victoria), Shahira Khair (University of Victoria), and Drew Leske (University of Victoria)

Level: Introductory

Format: Lecture + Hands-on

Certificate: Attendance

Prerequisite: Familiarity with command-line tools in a Unix environment is not a requirement for the workshop but may be helpful for some of the hands-on activities.

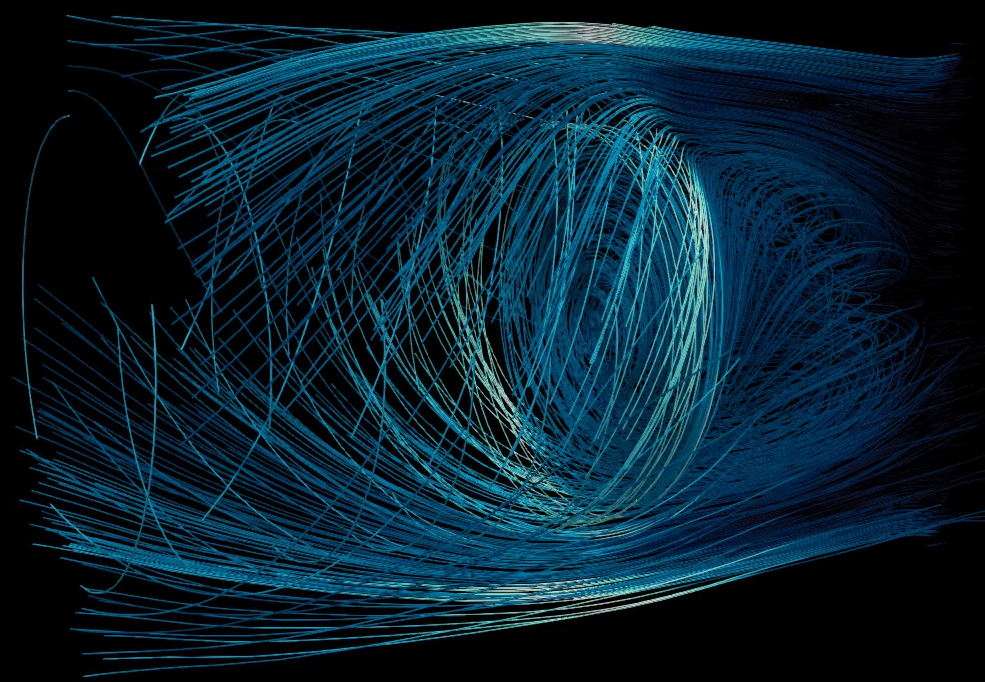

Description: During this workshop, we will learn about matplotlib which is a popular Python library that is great for 2D visualizations, and ParaView, a free and open-source visualization tool for creating 3D visualizations of your datasets. In this interactive workshop you will get familiar with how ParaView works and at the end you should be able to generate basic visualizations of the demo data.

Teacher: Jarno van der Kolk (University of Ottawa)

Level: Introductory

Format: Lecture + Hands-on

Certificate: Attendance

Prerequisites: None

Description: This session will focus on enhancing the FAIRness of sensitive and restricted access research data through strategies that support data deposit, de-identification, and responsible re-use. Participants will explore ensuring consent language enables data sharing, resources to support de-identification practices in preparation for deposit, and approaches to metadata and data deposit that facilitate controlled data re-use. The session will highlight practical considerations and real-world examples relevant to researchers, data stewards, and repository professionals working with sensitive or restricted access data.

Teacher: Victoria Smith (Alliance)

Level: Introduction

Format: Lecture

Certificate: Attendance

Prerequisites: None

Description: This workshop introduces the topic of text mining and its applications. It covers different encoding mechanisms to convert text into numbers that algorithms can handle. It gives an overview of different text mining tasks, including de-identification, sentiment analysis and document clustering, and how they work with examples and live demos. There will also be references to state-of-the-art tools and libraries to conduct various text mining tasks.

Teacher: Amal Khalil (CAC, Queen's University)

Level: Introductory

Format: Lecture + Hands-on

Certificate: Attendance

Prerequisites: Basic Python

Description: Apptainer is a secure container technology designed to be used on for high performance compute clusters. This workshop will focus on how to use Apptainer as well as how to make use of tools such as Conda and Spack within Apptainer. By the end of these sessions, one will have learnt about Apptainer and how it is installed and used on our computer clusters, how to build an Apptainer container image, how to install tools such as Conda/Spack from inside an Apptainer container shell, and,

how to use Apptainer containers within job submission scripts.

Teacher: Paul Preney (SHARCNET, University of Windsor)

Level: Introductory

Format: Lecture + Hands-on

Certificate: Attendance

Prerequisite: Basic knowledge of Linux shell and how to run programs from the shell.